The Rise of AI Music: Exploring DeepMind’s Lyria Model

AI music generation has reached new heights with Lyria, a groundbreaking model developed by Google DeepMind. Lyria aims to transform how music is created by generating instrumental and vocal arrangements with improved continuity across song sections, which opens up new possibilities for musicians and producers.

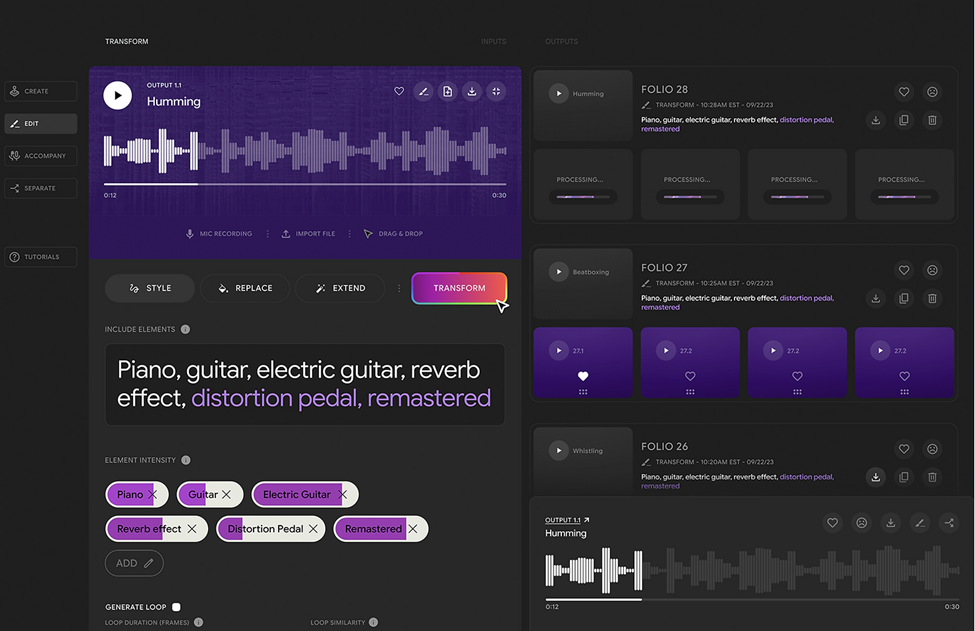

With Lyria, AI-generated music can serve as a soundtrack for social videos or as a backing track, or it can be re-created with MIDI and new sound design techniques. This lets artists explore unique compositions and experiment with different musical styles. The quality of Lyria’s output is impressive and shows how far AI music generation has advanced. That said, as explained in our RipX DAW for AI Music Makers blog post, users who need to customize AI-generated music further can use a program like RipX DAW to edit, correct, rearrange, and redevelop the audio.

RipX DAW Interface | Credit: hitnmix.com

One notable example of Lyria’s capabilities is Dream Track, an AI experiment created with YouTube Shorts that generates soundtracks in the style of popular artists such as Alec Benjamin, Charlie Puth, and Sia. The partnership shows how AI music tools like Lyria can emulate the musical style of established artists and offer a source of inspiration for aspiring musicians.

The release of Lyria marks a significant milestone in AI music production. Its ability to generate high-quality instrumental and vocal arrangements opens new creative possibilities in the music industry. In the sections below, we look at Lyria’s features and applications and at its potential to shape the future of music creation.

The Evolution of AI Music

Challenges in AI Music Creation

Creating compelling music has always been a challenge for AI systems due to the complexity and nuances of human expression. The ability to capture the emotions, dynamics, and subtleties that make music truly captivating has been a significant hurdle for AI-generated compositions. However, recent advancements in deep learning and neural networks have propelled AI music generation forward.

With more sophisticated algorithms and greater computing power, AI music generation has made remarkable progress in recent years. Modern models can analyze vast amounts of musical data, learn its patterns, and generate compositions that are far more coherent and expressive than earlier systems could produce.

Introduction of DeepMind’s Lyria Model

Google DeepMind developed Lyria specifically for AI music generation and launched two experiments built on it: Dream Track and Music AI tools. These experiments show the potential of generative music technology to shape the future of music creation.

Dream Track is a collaboration between Google DeepMind and YouTube Shorts. It uses Lyria to generate soundtracks in the style of the artists mentioned above, with more artists on the way.

The Music AI tools developed alongside Lyria offer musicians and producers a new way to approach composition. Built on deep learning, these tools let users explore musical ideas quickly. They provide suggestions for chord progressions, melodies, harmonies, and even lyrics based on user input or existing compositions, giving artists new creative avenues while preserving their own artistic vision. Although these features remain largely untested in the marketplace, the possibilities are exciting.

The introduction of DeepMind’s Lyria model is a significant leap forward in AI music generation. Its progress on capturing human expression in music points to further advances in the field. Next, we look at how Lyria addresses the continuity and arrangement challenges that AI-generated music has faced.

Exploring Lyria’s Features and Applications

Lyria Dashboard | Credit: Google DeepMind Blog

Continuity and Arrangement

Lyria addresses one of the key challenges in AI-generated music by focusing on continuity and arrangement. The model aims to create seamless transitions between the sections of a song, helping musicians and producers develop cohesive compositions with better flow and structure. Smoother transitions improve the listening experience and give a more polished result.

The continuity feature of Lyria is particularly valuable for musicians who want to create dynamic compositions that evolve naturally. It allows for smoother progressions between verses, choruses, bridges, and other song sections. With Lyria’s assistance, artists can explore new musical ideas while maintaining a consistent theme throughout their compositions.

SynthID: A Response to Legal Pressures

SynthID converts audio into a visual spectrogram to add a digital watermark.

As AI-generated music gains popularity, concerns about copyright infringement and ownership rights have emerged within the music industry. In response to these legal pressures, Google has introduced SynthID as an inaudible watermarking system. SynthID serves as a means to identify and track generative AI audio, ensuring transparency and accountability in the use of AI music tools.
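This post does not describe how SynthID embeds its watermark internally, so the sketch below is not SynthID. As a hedged illustration of the general idea of a key-based watermark that a detector can later find, it uses classic spread-spectrum watermarking, working directly on the audio samples rather than on a spectrogram: a low-amplitude pseudorandom +1/-1 pattern derived from a secret key is added to the audio, and detection correlates the audio with the same pattern. The function names (key_sequence, embed, detect, is_watermarked), the strength of 0.01, and the 0.005 threshold are hypothetical choices for illustration only.

```python
import math
import random


def key_sequence(key: int, length: int) -> list[float]:
    """Hypothetical helper: pseudorandom +1/-1 pattern derived from a secret key."""
    rng = random.Random(key)  # same key -> same pattern, so embedder and detector agree
    return [1.0 if rng.random() < 0.5 else -1.0 for _ in range(length)]


def embed(samples: list[float], key: int, strength: float = 0.01) -> list[float]:
    """Toy spread-spectrum embedding (NOT SynthID): add a keyed low-level pattern."""
    chips = key_sequence(key, len(samples))
    return [s + strength * c for s, c in zip(samples, chips)]


def detect(samples: list[float], key: int) -> float:
    """Normalized correlation between the audio and the keyed pattern.

    For marked audio this is roughly `strength`; for unmarked audio (or the
    wrong key) it stays near 0, because ordinary audio is uncorrelated with
    the random pattern.
    """
    chips = key_sequence(key, len(samples))
    return sum(s * c for s, c in zip(samples, chips)) / len(samples)


def is_watermarked(samples: list[float], key: int, threshold: float = 0.005) -> bool:
    # The threshold sits halfway between 0 (unmarked) and the default strength 0.01.
    return detect(samples, key) > threshold


if __name__ == "__main__":
    rate = 44_100
    # Five seconds of a 440 Hz tone stands in for a generated track.
    track = [0.5 * math.sin(2 * math.pi * 440 * n / rate) for n in range(5 * rate)]
    marked = embed(track, key=1234)
    print("marked, right key:", is_watermarked(marked, key=1234))
    print("unmarked, right key:", is_watermarked(track, key=1234))
    print("marked, wrong key:", is_watermarked(marked, key=9999))
```

Only someone holding the key can run the detector, which is the property that lets a provider check whether a clip came from its own generator. A production system would also need the mark to stay inaudible and survive edits such as compression or re-recording; this toy makes no such attempt.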

By implementing SynthID, Google DeepMind aims to address concerns about the originality and ownership of AI-generated music. Because the watermark stays embedded in the audio, Lyria-generated tracks can be identified later, which helps track how they are used and acts as a safeguard against unauthorized distribution or plagiarism of AI-generated compositions.

The introduction of SynthID demonstrates Google’s commitment to the responsible use of AI music tools. By making AI-generated audio identifiable, it helps protect artists’ rights and fosters trust within the industry around the ethical use of generative music technologies.

The Future of AI Music Creation

Growing Popularity of AI Music Tools

AI music and AI-assisted production methods are surging in popularity within the music industry. One study found that nearly 30% of indie artists already use AI music tools, highlighting the increasing adoption of this technology. These tools offer musicians new ways to explore their creativity and enhance their compositions.

However, it is worth noting that over half of musicians would conceal their use of AI music tools. This suggests that there is still a need for further acceptance and understanding of AI-generated music. As the technology continues to evolve, it is essential for artists to embrace these tools openly and recognize the potential they hold for innovation and artistic growth.

The Role of AI in Music Production

The rise of AI music generators has sparked discussion about whether they could replace human producers. According to one survey, an astonishing 73% of producers believe AI music generators could eventually replace them. While this raises concerns about job security, it is important to acknowledge the unique creativity and intuition that human producers bring to the table. As mentioned earlier, cutting-edge software such as RipX DAW offers a third option: humans collaborating effectively and efficiently with AI.

AI can undoubtedly enhance and streamline various aspects of music production processes. It can assist with tasks such as generating ideas, suggesting chord progressions, or even creating instrumental accompaniments. However, the human touch remains invaluable when it comes to making artistic decisions, interpreting emotions, and infusing personal experiences into musical compositions.

Rather than viewing AI as a threat, it is more productive to see it as a powerful tool that complements human creativity. By embracing the possibilities offered by AI in music production, artists can leverage its capabilities while retaining their individuality and artistic vision.

Unleashing the Potential of DeepMind’s Lyria Model

DeepMind’s Lyria model represents a significant step forward in AI music generation, addressing challenges in continuity and arrangement. Furthermore, the introduction of SynthID by Google DeepMind ensures transparency and accountability in the use of AI-generated music. This addition addresses mounting legal pressures surrounding copyright and ownership of AI-generated music, providing a framework for responsible usage.

While AI music tools are gaining popularity, it is crucial to recognize the irreplaceable role of human creativity and intuition in music production. The adoption of AI by artists demonstrates its growing acceptance within the industry. However, it is important to remember that these tools should be seen as enhancers rather than replacements for human artistic expression.

As we continue to explore the potential of DeepMind’s Lyria model and other AI music technologies, it is essential to strike a balance between leveraging the capabilities they offer and preserving the unique qualities that make human-created music so special.


If people are still afraid to admit that they are using A.I. for production, who would want their audio riddled with waveform watermarks?

I get why you would want to do this with your own watermark, already a thing.

But having someone else’s watermark on my stuff?

No thank you very much.

It is not a tool for “creativity”. It is an insidious reduction of the human form of art into an infinite copy & paste of the “soul”. Now the imitation of the human is good enough to make the human unnecessary, what is “art”? What is human? What is music?

It is pleasant noise without meaning, stolen from the creator.

We are undone.

We see AI as one part of a musician’s toolset, aiding the creative process, not replacing it. The experience and talent of a real human musician is still needed to make the best use of the tools available and to create truly original and enjoyable music.