The Fascinating World of AI Music

Artificial Intelligence (AI) has revolutionized various industries, and the world of music is no exception. AI music, also known as machine-generated music, has the potential to transform the way we create and experience musical compositions. This emerging field combines the power of technology with artistic expression, opening up new possibilities for musicians, producers, and listeners alike.

AI music encompasses a wide range of applications, from composing original pieces to generating background tracks for videos and games. It offers endless opportunities for experimentation and exploration in musical creativity. By leveraging advanced algorithms and deep learning techniques, AI can analyze vast amounts of musical data and generate compositions that mimic different genres or styles.

The impact of AI music on the industry is profound. It helps musicians break through creative barriers by providing inspiration and generating ideas they might not have arrived at otherwise. It also offers a cost-effective option for content creators who need high-quality music without extensive human involvement.

As AI continues to evolve, so does its influence on the world of music. From assisting composers in their creative process to enhancing live performances with real-time improvisation, artificial intelligence is reshaping how we perceive and interact with music.

Humanizing AI Music: Overcoming Challenges

Challenges in Making AI Music Sound Human

Creating authentic and emotional AI-generated music poses several challenges. While AI algorithms excel at analyzing patterns and generating music based on existing data, capturing the essence of human emotion and expression is a complex task.

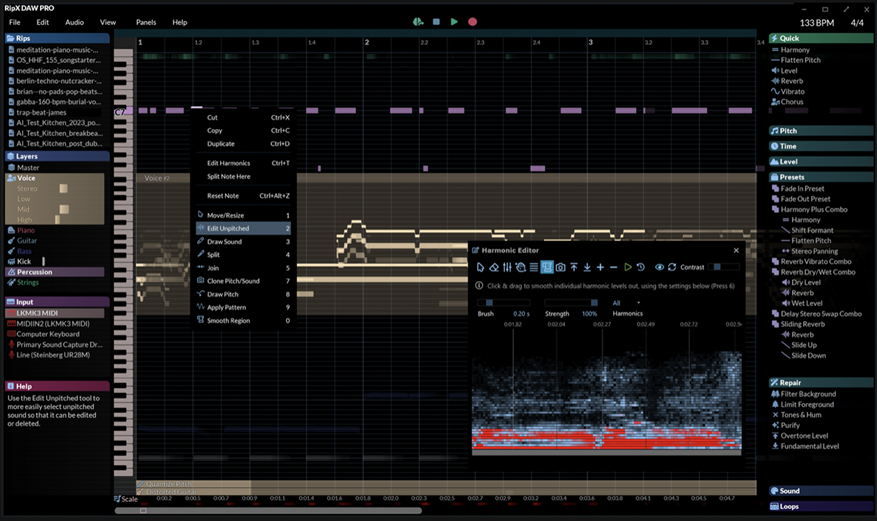

One of the main difficulties lies in infusing human-like qualities into AI music. Music has always been deeply rooted in human experiences, emotions, and cultural contexts. To resonate with listeners, AI music needs to evoke similar emotions and connect on a personal level. This is where software like RipX DAW comes into play: it lets humans take ‘robotic’ AI generations and reshape them with a human feel (and brain), producing an end result that feels far more relatable to the human ear.

Another challenge is achieving the level of nuance and improvisation that human musicians bring to their performances. Human musicians can adapt their playing style, add subtle variations, and respond to the energy of a live audience. Replicating these nuances in machine-generated music requires advanced algorithms capable of simulating the intricacies of human musical expression.

Furthermore, AI music risks sounding repetitive or formulaic. Since AI models learn from existing compositions, they may inadvertently reproduce familiar patterns without introducing enough originality. Striking a balance between familiarity and novelty is crucial for creating engaging AI-generated music. Platforms like Boomy and Soundful allow basic editing of song structure within their AI generations, but they only scratch the surface of what truly original song structure could be.

Despite these challenges, researchers and developers are actively working towards overcoming them. By incorporating techniques such as reinforcement learning and generative adversarial networks (GANs), they aim to enhance the expressiveness and authenticity of AI-generated music.

Exploring AI Production Tools for Authentic Sound

Introduction to AI Production Tools

AI production tools play a vital role in the creation of music, offering innovative solutions and expanding the creative possibilities for artists. These tools leverage artificial intelligence to assist musicians, producers, and composers in various aspects of music production.

One of the key benefits of using AI production tools is their ability to generate authentic sound. By analyzing vast amounts of musical data, these tools can learn patterns, styles, and genres, allowing them to create compositions that closely resemble human-made music. This opens up new avenues for experimentation and exploration in music creation.

Additionally, AI production tools assist throughout the music production process, with tasks such as melody generation (DopeLoop), chord progression suggestions (ChordChord), and even automated mixing and mastering (RoEx, LANDR). This streamlines the workflow and lets musicians focus more on their artistic vision.

Music Language Models (LMs)

Music Language Models (LMs) are a specific type of AI tool designed for generating musical compositions. These models are trained on large datasets of existing music and can generate new melodies, harmonies, and rhythms based on the patterns they have learned.

The concept behind music LMs is borrowed from natural language processing. A text language model predicts the next word from the words that came before it; a music LM represents music as a sequence of tokens (notes, or short chunks of encoded audio) and predicts the next token in the same way. By learning the underlying structure and ‘grammar’ of different genres or styles, an LM can create original compositions that follow specific musical conventions.

The advantages of using LMs in AI music production are numerous. They provide a starting point for composers who are experiencing creative blocks or seeking inspiration. They also add a collaborative element, letting musicians interact with the model’s generated ideas and build upon them.
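The train-then-predict idea can be shown with a deliberately tiny sketch. In place of a neural network it uses a first-order Markov chain, a much simpler technique: it counts which note follows which in some training melodies, then samples a new melody one note at a time from those counts. Real music LMs such as MusicLM use large neural networks over learned audio tokens, so treat this only as an illustration of the loop; the toy melodies and function names are hypothetical.

```python
import random
from collections import Counter, defaultdict

# Illustrative only: a first-order Markov chain stands in for a neural music LM.

def train_bigram_model(melodies):
    """Count how often each note is followed by each other note."""
    transitions = defaultdict(Counter)
    for melody in melodies:
        for current, nxt in zip(melody, melody[1:]):
            transitions[current][nxt] += 1
    return transitions

def generate(transitions, start, length, seed=None):
    """Sample a new melody one note at a time from the learned counts."""
    rng = random.Random(seed)
    melody = [start]
    while len(melody) < length:
        followers = transitions.get(melody[-1])
        if not followers:  # dead end: this note never had a successor in training
            break
        notes = list(followers)
        weights = [followers[n] for n in notes]
        melody.append(rng.choices(notes, weights=weights, k=1)[0])
    return melody

# Hypothetical toy "training data": two short melodies in C major.
training = [
    ["C", "D", "E", "C", "E", "G", "E", "D", "C"],
    ["E", "F", "G", "E", "D", "C", "D", "E", "C"],
]
model = train_bigram_model(training)
print(generate(model, start="C", length=8, seed=42))
```

Every generated note is drawn only from successors seen in training, which also hints at why such models can sound formulaic: they cannot propose a transition they never observed.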

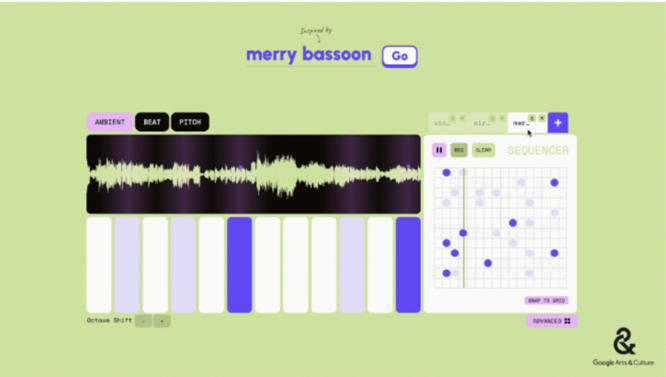

With their ability to generate diverse musical phrases and motifs, music LMs add complexity and depth to AI-generated music. Musicians can experiment with the variations and combinations an LM suggests, producing unique compositions that blend human creativity with machine-generated assistance. Google’s recently released MusicLM ‘Instrument Playground’ is one example: users enter an instrument plus a text descriptor, and it generates a 20-second audio loop.

DeepMind’s Lyria and Stable Audio: Revolutionizing Music Creation

DeepMind’s Lyria

While we’re on the subject of Google, DeepMind’s Lyria is an advanced AI music generation model that has made significant contributions to music production. Lyria combines cutting-edge machine learning techniques with a deep understanding of music theory, enabling musicians and composers to explore new creative possibilities.

Lyria offers a range of capabilities and innovations that enhance music production. It can generate melodic ideas, harmonies, and even entire compositions based on user input or learned patterns from existing music. This allows artists to quickly iterate and experiment with different musical ideas, accelerating the creative process.

One of the key strengths of Lyria is its ability to foster collaboration in AI music. Musicians can interact with the generated content, modify it according to their artistic vision, and seamlessly integrate it into their compositions. This collaborative approach between human creativity and AI assistance opens up exciting avenues for co-creation and exploration in music production.

Stable Audio

Stable Audio, from Stability AI, stands out as a groundbreaking force at the intersection of music and technology. With its innovative approach and ethically sourced training data, Stable Audio blends advanced audio engineering with the latest tech trends to enhance the music creation experience. Its offerings include high-fidelity audio, full-length tracks, and individual audio sample creation.

What sets Stable Audio apart is its commitment to quality, versatility, and ethics. Its models were trained on a licensed dataset of over 800,000 audio files (music, sound effects, and single-instrument stems) paired with detailed text descriptions, which lets them produce highly nuanced, detailed audio. As a result, users can include descriptors such as key, BPM, and even abstract adjectives in their prompts to get unique, usable outputs. Though it’s not perfect, the model is deeply impressive and will likely keep maturing into something powerful.

The Future of DAWs and AI in Music

The Evolution of DAWs with AI Integration

Digital Audio Workstations (DAWs) have been at the forefront of music production for decades, providing musicians and producers with powerful tools to create, edit, and mix music. However, the integration of artificial intelligence (AI) into DAWs is set to revolutionize the way music is produced.

Integrating AI into DAWs opens up a world of possibilities for musicians. AI algorithms can analyze vast amounts of musical data and offer intelligent suggestions for chord progressions, melodies, and arrangements, helping artists overcome creative blocks and discover ideas they might not have considered otherwise. RipX DAW, billed as the world’s first AI DAW, takes this a step further with features such as stem separation, note-by-note editing of each separated stem, and even full-blown instrument replacement, allowing unparalleled customization of any existing track, including AI generations.

One potential benefit of AI integration in DAWs is the ability to automate time-consuming tasks (e.g., RipX DAW PRO’s RipScripts). AI algorithms can assist with many audio editing and noise-reduction tasks, and can even adjust levels automatically during mixing and mastering. This lets musicians focus on their artistic vision rather than getting caught up in technical details.
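Automatic level adjustment is the easiest of these tasks to illustrate. The sketch below is not how RipX or any other specific product works, and it involves no machine learning: it is a plain RMS normalization that scales a signal so its root-mean-square level reaches a target in dBFS, backing off the gain if that would clip. AI mixing tools make far more context-aware decisions; the function names and the -18 dBFS default are assumptions chosen for illustration.

```python
import math

def rms(samples):
    """Root-mean-square level of a list of float samples in [-1.0, 1.0]."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))

def normalize_to_rms(samples, target_dbfs=-18.0):
    """Scale samples so their RMS level equals target_dbfs, without clipping.

    target_dbfs is an assumed example target, not a value from the article.
    """
    current = rms(samples)
    if current == 0.0:
        return list(samples)  # silence: nothing to adjust
    target_linear = 10 ** (target_dbfs / 20)  # dBFS -> linear amplitude
    gain = target_linear / current
    peak = max(abs(s) for s in samples)
    # Reduce the gain if applying it would push any sample past full scale.
    if peak * gain > 1.0:
        gain = 1.0 / peak
    return [s * gain for s in samples]

# A quiet one-second 440 Hz sine at 44.1 kHz with peak amplitude 0.05.
sr = 44100
quiet = [0.05 * math.sin(2 * math.pi * 440 * n / sr) for n in range(sr)]
louder = normalize_to_rms(quiet)
print(round(20 * math.log10(rms(louder)), 2))  # prints -18.0
```

The clipping guard means a very peaky signal may end up quieter than the target; real mastering chains typically add compression or limiting at that point instead of simply lowering the gain.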

As technology continues to advance, we can expect AI to become even more deeply integrated into DAWs. From more sophisticated machine learning algorithms to better natural language processing, the future holds immense potential for intelligent tools that augment musicians’ creativity.

Humanizing AI Music: A Creative Journey

Humanizing generative AI music is a fascinating and ongoing creative journey. As we explore the possibilities of AI music, it becomes essential to add authenticity and emotion to the compositions generated by these algorithms.

To humanize AI music, musicians and producers can employ various techniques. They can experiment with adding subtle variations, dynamics, and expressive nuances to the AI-generated melodies. By infusing personal touches and artistic interpretations, they can breathe life into the machine-generated compositions.
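A common ‘humanize’ technique, offered in many DAWs and MIDI editors, nudges each note’s timing and velocity by small random amounts so a rigidly quantized part sounds less mechanical. The sketch below assumes notes are stored as simple (pitch, start in beats, velocity) records; the Note type, the humanize function, and the default jitter amounts are hypothetical choices for illustration, not any particular tool’s implementation.

```python
import random
from dataclasses import dataclass, replace

@dataclass(frozen=True)
class Note:
    pitch: int      # MIDI note number, 0-127
    start: float    # onset time in beats
    velocity: int   # MIDI velocity, 1-127

def humanize(notes, timing_jitter=0.02, velocity_jitter=8, seed=None):
    """Return copies of notes with small random timing and velocity offsets.

    timing_jitter is a standard deviation in beats; velocity_jitter is a
    standard deviation in MIDI velocity units. Both defaults are assumptions.
    """
    rng = random.Random(seed)
    result = []
    for note in notes:
        # Gaussian offsets keep most deviations small, as a player's would be.
        start = max(0.0, note.start + rng.gauss(0.0, timing_jitter))
        velocity = round(note.velocity + rng.gauss(0.0, velocity_jitter))
        velocity = min(127, max(1, velocity))  # keep within the valid MIDI range
        result.append(replace(note, start=start, velocity=velocity))
    return result

# A rigidly quantized C major arpeggio, every note at velocity 100.
robotic = [Note(60, 0.0, 100), Note(64, 0.5, 100), Note(67, 1.0, 100), Note(72, 1.5, 100)]
for n in humanize(robotic, seed=7):
    print(n)
```

Passing a seed makes a given ‘performance’ reproducible, so a producer can audition variations and keep the one that feels right.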

Additionally, incorporating real instrument recordings or utilizing high-quality virtual instruments can enhance the authenticity of AI music. This combination of human performance and AI assistance creates a unique blend that resonates with listeners on a deeper level.

As technology advances, new techniques and tools will continue to emerge, allowing us to push the boundaries of what is possible in humanizing AI music. With creativity as our guide, we embark on an exciting journey of exploring the intersection between artificial intelligence and musical expression.